
A UAV-based dataset for plant monitoring in maize fields

By Ekin Celikkan and Nadja Klein, posted on February 21, 2025

This blog post is about our paper WeedsGalore: A Multispectral and Multitemporal UAV-based Dataset for Crop and Weed Segmentation in Agricultural Maize Fields (WACV 2025) [1], the result of interdisciplinary work between researchers from Karlsruhe Institute of Technology and GFZ Helmholtz Centre for Geosciences.

The paper can be found here. The accompanying code and data are available on GitHub.

What is the paper about?

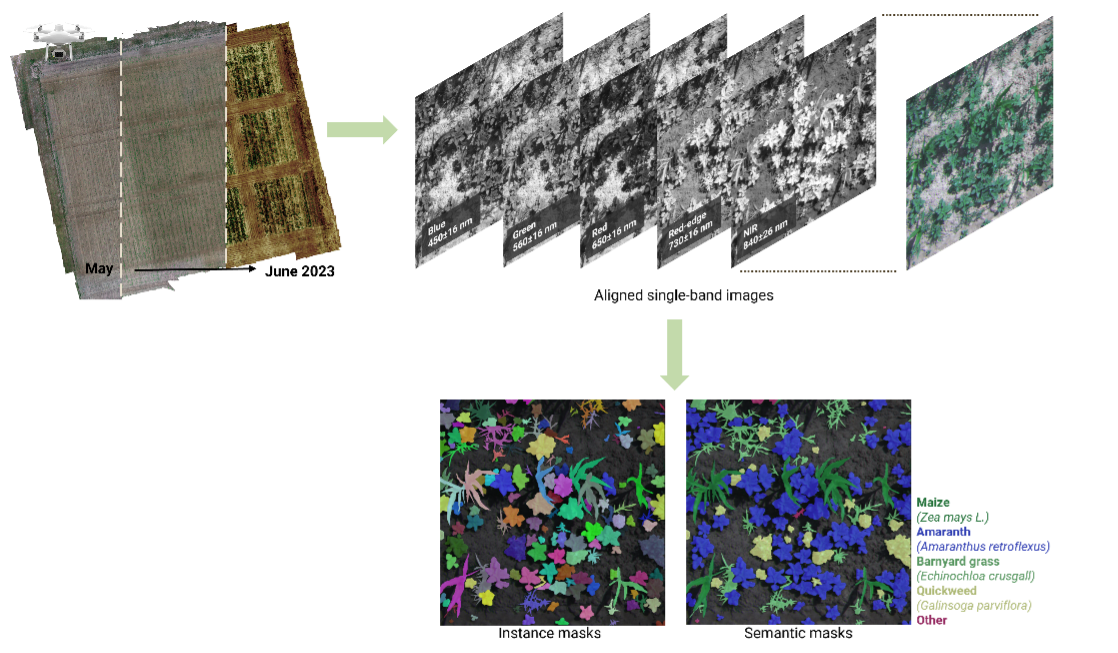

WeedsGalore contains low-altitude remote sensing images of a real-world agricultural cropland, captured by a UAV equipped with a multispectral sensor. The five available channels correspond to the red, green, blue, near-infrared, and red edge wavelengths. The dataset includes fine-grained instance and semantic annotations for maize (corn) and four common weed species. State-of-the-art deep neural networks trained on it can effectively monitor weeds in agricultural maize fields.
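The five channels are provided as separate, aligned single-band images, so a typical first step before training a segmentation network is to stack them into one multi-channel array. The following is a minimal pure-Python sketch of that step. The band names, their order (`BAND_ORDER`), and the helper `stack_bands` are hypothetical and not taken from the WeedsGalore repository, which may name and order the bands differently.

```python
from typing import Dict, List

# Hypothetical band names and order, for illustration only; the released
# WeedsGalore files may use different names or a different channel order.
BAND_ORDER = ("red", "green", "blue", "nir", "red_edge")

Image2D = List[List[float]]  # one single-band image as rows of pixel values


def stack_bands(bands: Dict[str, Image2D]) -> List[List[List[float]]]:
    """Stack aligned single-band images into an H x W x 5 nested list.

    Raises ValueError if a band is missing or its shape differs from the
    first band, since pixel-wise stacking assumes co-registered images.
    """
    missing = [name for name in BAND_ORDER if name not in bands]
    if missing:
        raise ValueError(f"missing bands: {missing}")
    ref = bands[BAND_ORDER[0]]
    height, width = len(ref), len(ref[0])
    for name in BAND_ORDER:
        img = bands[name]
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError(f"band {name!r} does not match the shape of {BAND_ORDER[0]!r}")
    # out[y][x] is the 5-value spectral vector of pixel (y, x)
    return [
        [[bands[name][y][x] for name in BAND_ORDER] for x in range(width)]
        for y in range(height)
    ]
```

With NumPy, the same stacking is a single call, `np.stack([bands[name] for name in BAND_ORDER], axis=-1)`; the explicit loops above only make the per-pixel layout visible.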

Motivation

Weed management is an important component of agricultural practice, both for maximizing yield and for sustainable production. It requires identifying, localizing, and monitoring weeds in croplands. Doing this manually is arduous and inefficient, so automated approaches are needed and are being developed. As in many other areas, high-quality datasets are essential both for developing data-driven methods and for testing and benchmarking model performance. Our dataset aims to enable this for UAV-based monitoring in maize fields.

WeedsGalore Dataset

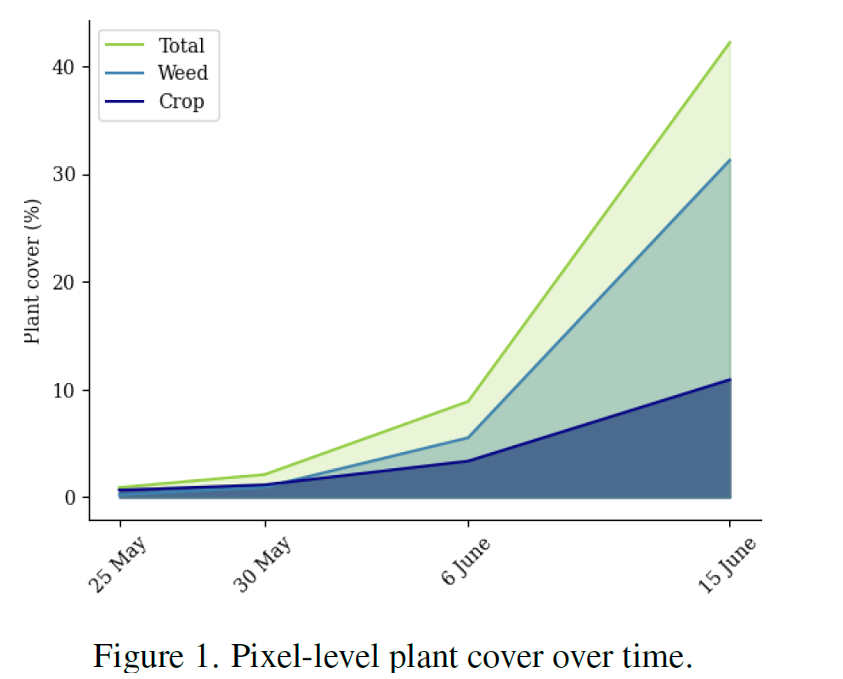

The data comes from a field in Germany, where high-resolution images were collected by a drone carrying a multispectral camera at a flight altitude of 5 meters. The dataset contains aligned single-band images in five bands (red, green, blue, near-infrared, and red edge), together with semantic and instance masks for maize (corn) and four weed species. One key characteristic of the dataset is the variability of the plants: the recordings were repeated on multiple dates, so different growth stages of both crops and weeds are represented. Another important feature is plant density, in terms of both instance count and pixel cover. Especially for weeds, WeedsGalore offers an exceptionally high number of plant instances compared to existing UAV-based weed monitoring datasets, which makes it both a rich and a challenging dataset.
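Plant density, in terms of pixel cover and instance count, can be read directly off the masks. Below is a minimal sketch, assuming a hypothetical label encoding (0 = background, 1 = maize, 2 to 5 = the four weed species) and instance masks in which 0 means "no plant" and every plant has its own positive ID; the encoding in the released data may differ. The hypothetical helper `to_uni_weed` merges the four weed classes into one, mirroring the uni-weed setting used in the experiments.

```python
from collections import Counter
from typing import Dict, List, Set

Mask = List[List[int]]

# Hypothetical label encoding for illustration only:
# 0 = background, 1 = maize, 2..5 = the four weed species.
WEED_IDS = {2, 3, 4, 5}
UNI_WEED_ID = 2  # label assigned to the merged "weed" class


def to_uni_weed(semantic: Mask) -> Mask:
    """Relabel every weed class as a single weed class (uni-weed setting)."""
    return [[UNI_WEED_ID if c in WEED_IDS else c for c in row] for row in semantic]


def plant_density(semantic: Mask, instance: Mask) -> Dict[int, Dict[str, float]]:
    """Per-class pixel cover (fraction of all pixels) and instance count.

    `instance` uses 0 for "no plant" and a unique positive id per plant.
    Background (label 0) is left out of the result.
    """
    total = sum(len(row) for row in semantic)
    pixel_counts = Counter(c for row in semantic for c in row)
    ids_per_class: Dict[int, Set[int]] = {}
    for sem_row, inst_row in zip(semantic, instance):
        for c, i in zip(sem_row, inst_row):
            if i > 0:
                ids_per_class.setdefault(c, set()).add(i)
    return {
        c: {"cover": n / total, "instances": len(ids_per_class.get(c, set()))}
        for c, n in pixel_counts.items()
        if c != 0
    }


# Tiny 3 x 3 example: one maize plant and three weed plants of two species.
semantic = [[0, 1, 1], [2, 3, 0], [3, 3, 0]]
instance = [[0, 1, 1], [2, 3, 0], [4, 4, 0]]
print(plant_density(semantic, instance))
print(plant_density(to_uni_weed(semantic), instance))
```

Because instances are counted as distinct IDs per class, merging the weed classes adds up their instance counts (as long as IDs are unique within an image), while their pixel cover simply sums.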

Experiments

Firstly, we obtain baseline results on our dataset. We split the field into separate train, validation, and test areas, which form the corresponding data splits. We use a CNN-based (DeepLabv3+ [2]) and a transformer-based (MaskFormer [3]) architecture to report performance in the uni-weed (i.e., all weed types are treated as one class), multi-weed, and instance segmentation scenarios. Secondly, we deploy a Bayesian version of the same CNN-based architecture for the semantic segmentation task. This widely used method, namely variational inference with MC Dropout [4], approximates the predictive posterior distribution. This way, not only can we obtain uncertainty (or confidence) scores for the model predictions, but we also observe improvements in model performance and calibration. Lastly, we test the model trained on WeedsGalore on reference data from another, unseen maize field. While both versions (deterministic and probabilistic) yield good results, the Bayesian model shows a strikingly large performance improvement.

Final Thoughts

UAVs are very promising platforms for weed management and monitoring, especially in very large croplands. They are non-invasive and can quickly cover extensive areas. I think this dataset, which focuses on maize, is a useful and effective resource for research and applications in this direction. If you are interested and want to try it out yourself, check out the GitHub repository!

References

[1] Celikkan E., Kunzmann T., Yeskaliyev Y., et al. (2025). WeedsGalore: A Multispectral and Multitemporal UAV-based Dataset for Crop and Weed Segmentation in Agricultural Maize Fields. In Proceedings of the Winter Conference on Applications of Computer Vision (WACV); 4767–4777.

[2] Chen L.-C., Zhu Y., Papandreou G., et al. (2018). Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV); 801–818.

[3] Cheng B., Schwing A., Kirillov A. (2021). Per-pixel classification is not all you need for semantic segmentation. Advances in Neural Information Processing Systems (NeurIPS); 34: 17864–17875.

[4] Gal Y., Ghahramani Z. (2016). Dropout as a Bayesian approximation: Representing model uncertainty in deep learning. In Proceedings of the International Conference on Machine Learning (ICML); 1050–1059.

For questions, comments or other matters related to this blog post, please contact us via kleinlab@scc.kit.edu.

If you find our work useful, please cite our paper:

@inproceedings{CelKunYesItzKleHer2025,
  title={WeedsGalore: A Multispectral and Multitemporal UAV-based Dataset for Crop and Weed Segmentation in Agricultural Maize Fields},
  author={Ekin Celikkan and Timo Kunzmann and Yertay Yeskaliyev and Sibylle Itzerott and Nadja Klein and Martin Herold},
  year={2025},
  booktitle={Proceedings of the Winter Conference on Applications of Computer Vision (WACV)},
  pages={4767--4777},
}