
Building Blocks for Robust and Effective Semi-Supervised Real-World Object Detection

By Moussa Kassem Sbeyti and Nadja Klein, posted on March 27, 2025

What is the paper about?

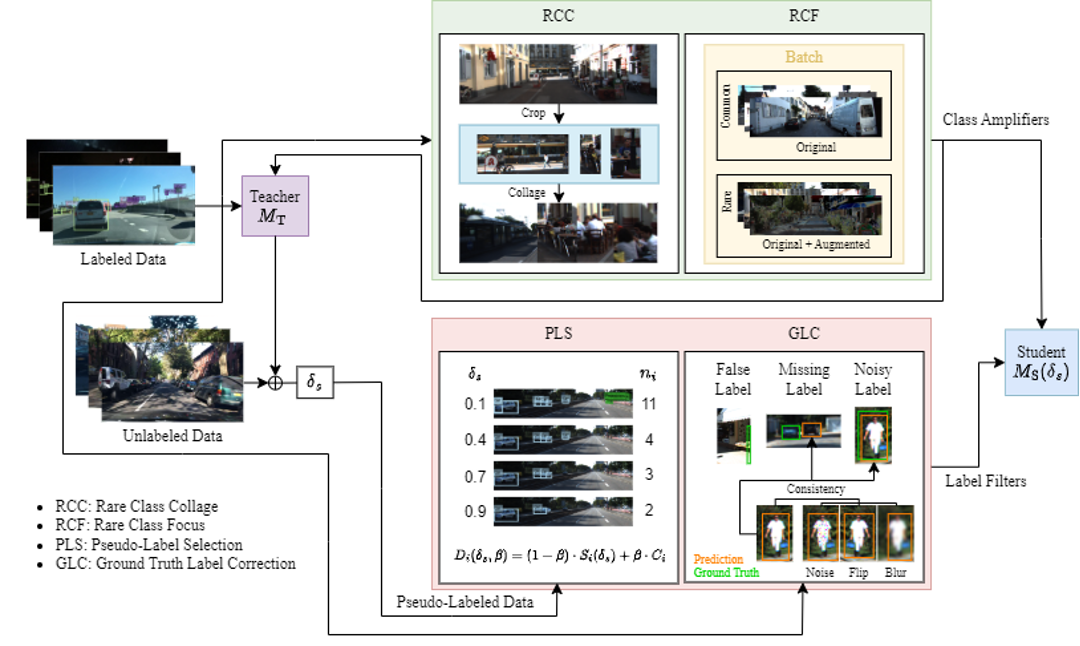

Object detection models require vast amounts of labeled data, but acquiring such datasets is expensive and labor-intensive. Semi-supervised object detection (SSOD) addresses this issue by leveraging both labeled and unlabeled data. However, real-world applications face three major challenges:

- (1) class imbalance, which leads to poor generalization,

- (2) noisy or incorrect ground truth labels, and

- (3) missing or inaccurate pseudo-labels that propagate errors.

To address these challenges, we propose four building blocks:

- Rare Class Collage (RCC): A data augmentation method that increases rare class representation by creating collages of rare objects.

- Rare Class Focus (RCF): A batch sampling strategy that ensures rare classes are consistently represented in training.

- Ground Truth Label Correction (GLC): A refinement method that identifies and corrects false, missing, and noisy ground truth labels using teacher model consistency.

- Pseudo-Label Selection (PLS): A filtering approach that removes low-quality pseudo-labeled images based on missing detection rates while considering class rarity.

Motivation

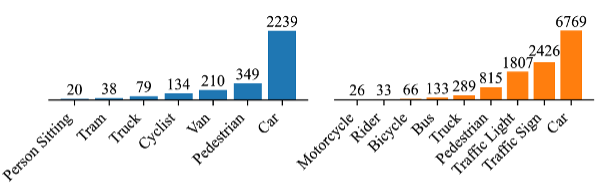

While pseudo-labeling is a powerful tool in SSOD, its effectiveness is limited by the quality of labeled and pseudo-labeled data. Many real-world datasets exhibit class imbalance (Fig. 2), where common objects dominate the training data and rare classes are poorly represented. Furthermore, label noise (Fig. 3), including incorrect or missing ground truth labels, compromises model learning. Finally, pseudo-labels generated by the teacher model are often incomplete or incorrect (Fig. 4), particularly for underrepresented classes, which leads to error propagation.

Addressing these challenges is critical for making SSOD frameworks applicable in practical settings such as autonomous driving and industrial inspection.

Theory

Enhancing Class Representation

Rare Class Collage (RCC)

- Instead of re-sampling entire images, RCC crops rare class objects and composes collages, ensuring their sufficient presence during training.

- This prevents overfitting to common classes and improves generalization.
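The collage idea can be illustrated with a short Python sketch. It assumes integer pixel boxes of the form `(x1, y1, x2, y2, cls)`, a fixed grid of equal-sized tiles, and crude nearest-neighbour downscaling; the function `rare_class_collage` and its `grid` and `tile` parameters are hypothetical and not taken from the paper's code.

```python
import numpy as np


def rare_class_collage(images, annotations, rare_classes, grid=(2, 2), tile=(128, 128)):
    """Tile crops of rare-class objects into one collage image.

    Hypothetical illustration of the RCC idea, not the paper's implementation.
    images: list of HxWx3 uint8 arrays.
    annotations: per image, a list of (x1, y1, x2, y2, cls) integer pixel boxes.
    Returns the collage and its boxes in collage coordinates.
    """
    rows, cols = grid
    th, tw = tile
    collage = np.zeros((rows * th, cols * tw, 3), dtype=np.uint8)

    # Collect crops of rare-class objects only; common classes are ignored.
    crops = []
    for img, anns in zip(images, annotations):
        for x1, y1, x2, y2, cls in anns:
            if cls in rare_classes and x2 > x1 and y2 > y1:
                crops.append((img[y1:y2, x1:x2], cls))

    boxes = []
    for idx, (crop, cls) in enumerate(crops[: rows * cols]):
        r, c = divmod(idx, cols)
        h, w = crop.shape[:2]
        # Downscale crops larger than a tile with nearest-neighbour index sampling.
        scale = min(1.0, th / h, tw / w)
        nh, nw = max(1, int(h * scale)), max(1, int(w * scale))
        ys = (np.arange(nh) * h / nh).astype(int)
        xs = (np.arange(nw) * w / nw).astype(int)
        oy, ox = r * th, c * tw
        collage[oy:oy + nh, ox:ox + nw] = crop[ys][:, xs]
        boxes.append((ox, oy, ox + nw, oy + nh, cls))
    return collage, boxes
```

Each collage and its boxes can then be added to the labeled training set as an extra image. Cropping objects rather than re-sampling whole images means rare objects are repeated without also repeating the common objects that surround them.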

Rare Class Focus (RCF)

- RCF modifies batch composition to always include at least one rare class instance per batch.

- Unlike standard batch sampling, this guarantees that rare objects are consistently included in training.
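One simple way to enforce the at-least-one-rare-instance rule is a batch generator that patches any batch lacking a rare-class image. The sketch below assumes each image is summarized by the set of class ids it contains; the name `rare_class_focus_batches` and the replace-the-last-slot strategy are illustrative assumptions, not necessarily how the paper implements RCF.

```python
import random


def rare_class_focus_batches(image_classes, rare_classes, batch_size, seed=0):
    """Yield one epoch of index batches, each containing at least one rare-class image.

    Hypothetical illustration of the RCF idea, not the paper's implementation.
    image_classes: entry i is the set of class ids annotated in image i.
    rare_classes: set of class ids treated as rare.
    """
    rng = random.Random(seed)
    rare_pool = [i for i, classes in enumerate(image_classes) if classes & rare_classes]
    if not rare_pool:
        raise ValueError("no image contains a rare class")

    order = list(range(len(image_classes)))
    rng.shuffle(order)
    for start in range(0, len(order), batch_size):
        batch = order[start:start + batch_size]
        if not any(image_classes[i] & rare_classes for i in batch):
            # No rare object in this batch: replace the last slot with a random rare-class image.
            batch[-1] = rng.choice(rare_pool)
        yield batch
```

Replacing a slot instead of appending one keeps the batch size fixed, at the cost of oversampling rare-class images within an epoch. In a PyTorch pipeline, a list of such batches could be passed as the `batch_sampler` argument of `torch.utils.data.DataLoader`.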

Improving Label Quality

Ground Truth Label Correction (GLC)

- GLC refines labels by leveraging inference-time augmentation and teacher model consistency.

- This corrects the false, missing, or noisy ground truth labels that are common in real-world datasets.
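The post names the ingredients of GLC (inference-time augmentation and teacher consistency) but not the exact correction rule, so the following is only a hypothetical sketch of the idea. It assumes a teacher detection is reliable if it appears with the same class and a score above `score_thr` in every augmented view (boxes already mapped back to original image coordinates). Reliable detections that no ground-truth box covers are added as missing labels, and ground-truth boxes overlapping a reliable detection of another class are relabelled. The thresholds, function names, and matching rule are assumptions, and refinement of noisy box coordinates is omitted.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def consistent_detections(views, score_thr=0.7, iou_thr=0.5):
    """Keep first-view detections confirmed (same class, high score) in every other view.

    views: one list of (box, cls, score) per test-time augmentation.
    """
    kept = []
    for box, cls, score in views[0]:
        if score < score_thr:
            continue
        if all(any(c == cls and s >= score_thr and iou(box, b) >= iou_thr
                   for b, c, s in view) for view in views[1:]):
            kept.append((box, cls))
    return kept


def correct_labels(gt, views, score_thr=0.7, iou_thr=0.5):
    """Hypothetical GLC-style correction; gt is a list of (box, cls)."""
    confirmed = consistent_detections(views, score_thr, iou_thr)
    corrected = []
    for g_box, g_cls in gt:
        # Relabel if the best-overlapping confirmed detection disagrees on the class.
        match = max(confirmed, key=lambda d: iou(g_box, d[0]), default=None)
        if match is not None and iou(g_box, match[0]) >= iou_thr and match[1] != g_cls:
            g_cls = match[1]
        corrected.append((g_box, g_cls))
    # Confirmed detections with no overlapping ground truth are treated as missing labels.
    for d_box, d_cls in confirmed:
        if all(iou(d_box, g_box) < iou_thr for g_box, _ in gt):
            corrected.append((d_box, d_cls))
    return corrected
```

Requiring agreement across augmented views is what keeps one-off teacher errors from being written into the labels; a detection that disappears under a simple flip or rescale is not trusted.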

Refining Pseudo-Label Selection

Pseudo-Label Selection (PLS)

- PLS introduces a class-aware missing detection rate metric and retains only pseudo-labeled images that provide a valuable learning signal.

- Unlike traditional confidence-based pseudo-label filtering, this metric accounts for both class rarity and missing objects.
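The post does not define the class-aware missing detection rate in detail. As a hypothetical proxy, the sketch below treats teacher detections scoring between `low_thr` and `high_thr` as likely objects that the pseudo-labels (scores of at least `high_thr`) would miss, weights every detection by inverse class frequency so that a missed rare object counts more than a missed common one, and drops images whose weighted rate exceeds `max_rate`. All names, thresholds, and the weighting scheme are assumptions, not the paper's definition.

```python
def missing_detection_rate(detections, class_weights, low_thr=0.3, high_thr=0.7):
    """Class-weighted share of likely objects that an image's pseudo-labels would miss.

    Hypothetical proxy, not the paper's metric.
    detections: teacher outputs for one unlabeled image as (cls, score) pairs.
    Scores >= high_thr become pseudo-labels; scores in [low_thr, high_thr) are
    treated as probable objects left unlabeled.
    """
    candidates = [(c, s) for c, s in detections if s >= low_thr]
    if not candidates:
        return 0.0
    total = sum(class_weights[c] for c, _ in candidates)
    missed = sum(class_weights[c] for c, s in candidates if s < high_thr)
    return missed / total


def select_pseudo_labeled(images, class_counts, max_rate=0.3, low_thr=0.3, high_thr=0.7):
    """Return ids of unlabeled images whose weighted missing rate is at most max_rate.

    images: dict image_id -> list of (cls, score).
    class_counts: labeled-set instance counts per class (all positive).
    """
    n_total = sum(class_counts.values())
    # Inverse-frequency weights: a missed rare object costs more than a missed common one.
    weights = {c: n_total / n for c, n in class_counts.items()}
    return [img_id for img_id, dets in images.items()
            if missing_detection_rate(dets, weights, low_thr, high_thr) <= max_rate]
```

With counts of 90 common and 10 rare instances, an image whose only uncertain detection is rare scores a rate of 0.9 and is dropped, while the mirror case with an uncertain common object scores 0.1 and is kept. A plain confidence threshold would treat the two images identically.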

Experiments

We conducted experiments on autonomous driving datasets (KITTI [4] and BDD100K [3]) using an EfficientDet-D0-based [2] SSOD framework [1]. Our key results are as follows:

- RCC and RCF significantly improve rare class detection without negatively impacting common class performance.

- GLC effectively corrects false and missing labels, boosting accuracy on challenging datasets.

- PLS reduces error propagation by filtering out low-quality pseudo-labels, enhancing student model performance.

- Combining all four building blocks yields up to a 6% improvement in SSOD performance compared to baseline models.

Final Thoughts

SSOD is a promising approach for reducing reliance on extensive labeled datasets. However, real-world deployments require methods that address class imbalance, improve label quality, and refine pseudo-label selection. Our paper investigates the effects of these three factors on SSOD and proposes four modules (RCC, RCF, GLC, and PLS) that provide effective solutions with minimal computational overhead. These techniques can be seamlessly integrated into SSOD pipelines, making them applicable across diverse object detection tasks.

Our work demonstrates that data-centric enhancements are crucial for making SSOD viable in real-world applications.

The code is available here.

References

[1] Sohn K., Zhang Z., Li C.-L., et al. (2020). A simple semi-supervised learning framework for object detection. arXiv preprint arXiv:2005.04757.

[2] Tan M., Pang R., Le Q. V. (2020). EfficientDet: Scalable and efficient object detection. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 10778–10787.

[3] Yu F., Chen H., Wang X., et al. (2020). BDD100K: A diverse driving dataset for heterogeneous multitask learning. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2636–2645.

[4] Geiger A., Lenz P., Urtasun R. (2012). Are we ready for autonomous driving? The KITTI vision benchmark suite. In IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 3354–3361.

For questions, comments or other matters related to this blog post, please contact us via kleinlab@scc.kit.edu.

If you find our work useful, please cite our paper:

@article{KasKleNowSivAlb2025,

title={Building Blocks for Robust and Effective Semi-Supervised Real-World Object Detection},

author={Moussa Kassem~Sbeyti and Nadja Klein and Azarm Nowzad and Fikret Sivrikaya and Sahin Albayrak},

journal={Transactions on Machine Learning Research},

issn={2835--8856},

year={2025},

url={https://openreview.net/forum?id=vRYt8QLKqK}

}