
Uncertainty-Aware Trajectory Prediction via Rule-Regularized Heteroscedastic Deep Classification

By Christian Schlauch and Nadja Klein, posted on July 18, 2025

This blog post presents our paper, Uncertainty-Aware Trajectory Prediction via Rule-Regularized Heteroscedastic Deep Classification, which was published at Robotics: Science and Systems (RSS) 2025. The work is the result of a collaboration with the Continental AI Lab Berlin. The paper can be found here and the code will be published here.

What is the paper about?

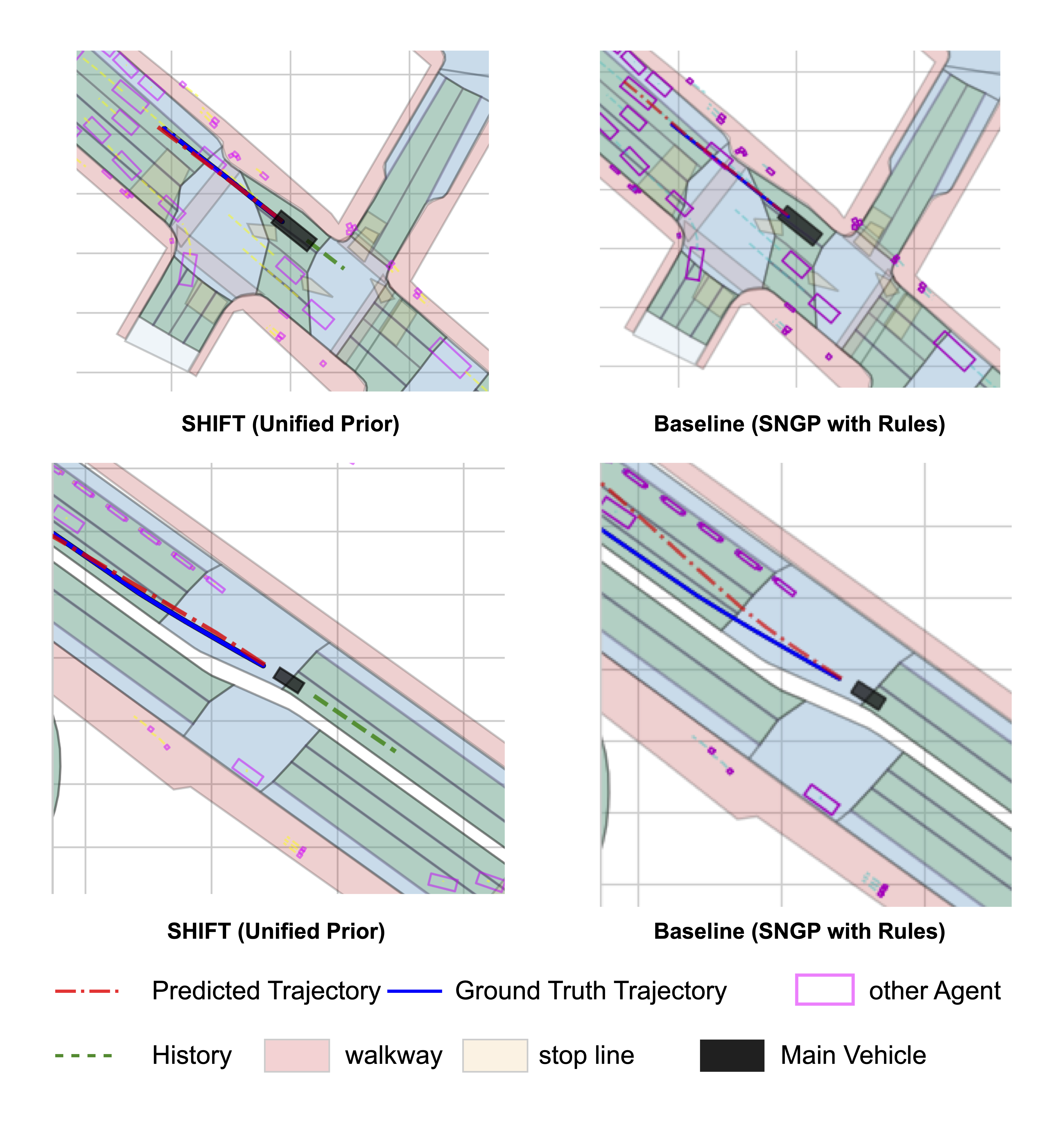

We propose SHIFT (Spectral Heteroscedastic Informed Forecasting for Trajectories), a novel training framework for motion prediction in autonomous driving. SHIFT combines two components: well-calibrated uncertainty modeling through a last-layer heteroscedastic Gaussian process, and informative priors derived from automatically extracted rules.

Motivation

Accurate, uncertainty-aware motion prediction is a cornerstone of safe and comfortable driving. In our previous blog post, we demonstrated how integrating prior knowledge in the form of rules can guide deep learning-based motion predictors, improving both uncertainty calibration and data efficiency. In this paper, we advance our approach by enhancing the uncertainty modeling and by introducing automatic rule extraction, which further improves scalability.

Theory

Our automatic rule extraction uses a large language model (LLM) to generate a Python function from a prompt that contains a natural-language description of the rule. This process is enhanced with Retrieval-Augmented Generation (RAG), which gives the LLM access to the NuScenes [3] dataset interface (see Fig. 2). Domain experts can review and validate the generated Python function before it is used to label candidate trajectories within a NuScenes data sample.
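To give a feel for what such a generated rule function and the labeling step could look like, here is a minimal sketch. It does not use the NuScenes interface: the names `stays_on_road`, `label_candidates` and `toy_is_drivable` are hypothetical, and the map is a toy straight road that we assume only for illustration.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Trajectory = Sequence[Point]


def stays_on_road(trajectory: Trajectory,
                  is_drivable: Callable[[Point], bool]) -> bool:
    """Hypothetical rule: every waypoint must lie on the drivable area."""
    return all(is_drivable(point) for point in trajectory)


def label_candidates(candidates: Sequence[Trajectory],
                     rule: Callable[[Trajectory], bool]) -> List[int]:
    """Assign 1 to rule-compliant candidates and 0 to all others."""
    return [1 if rule(trajectory) else 0 for trajectory in candidates]


def toy_is_drivable(point: Point) -> bool:
    """Toy map (assumption): a straight road, 4 m wide, centred on y = 0."""
    _, y = point
    return abs(y) <= 2.0


candidates = [
    [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)],  # keeps straight, stays on the road
    [(0.0, 0.0), (5.0, 1.0), (10.0, 3.0)],  # drifts off the road
]
labels = label_candidates(
    candidates, lambda trajectory: stays_on_road(trajectory, toy_is_drivable)
)
print(labels)
```

Because the rule is an ordinary Python function, an expert can read it and test it on hand-picked cases before it produces any training labels.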

These synthetic training labels are used in the first training stage of our SHIFT framework (see Fig. 1). Our model builds upon the CoverNet architecture [1], which formulates motion prediction as a trajectory anchor classification task. To ensure a distance-sensitive representation, we employ a spectral-normalized ResNet-50 as the feature extractor, projecting inputs into a compact intermediate embedding space.
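The anchor classification idea can be sketched in a few lines. The example below assumes a tiny, hand-written set of three anchors, takes the anchor closest to the observed trajectory (by mean point-wise Euclidean distance) as the class label, and evaluates a softmax cross-entropy on made-up logits. All names and numbers are illustrative assumptions, not our actual implementation.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Trajectory = Sequence[Point]


def mean_l2(a: Trajectory, b: Trajectory) -> float:
    """Mean point-wise Euclidean distance between two equal-length trajectories."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def nearest_anchor(observed: Trajectory, anchors: Sequence[Trajectory]) -> int:
    """Index of the anchor closest to the observed trajectory."""
    return min(range(len(anchors)), key=lambda i: mean_l2(observed, anchors[i]))


def cross_entropy(logits: List[float], target: int) -> float:
    """Softmax cross-entropy for one sample, using a stable log-sum-exp."""
    top = max(logits)
    log_norm = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_norm - logits[target]


anchors = [
    [(5.0, 0.0), (10.0, 0.0)],    # go straight
    [(5.0, 1.0), (10.0, 4.0)],    # turn left
    [(5.0, -1.0), (10.0, -4.0)],  # turn right
]
observed = [(5.0, 0.8), (10.0, 3.5)]

target = nearest_anchor(observed, anchors)     # class label for this sample
loss = cross_entropy([0.2, 1.5, -0.3], target)  # logits are made up
print(target, round(loss, 3))
```

Framing prediction as classification over a fixed anchor set is also what makes rule-based labels convenient: a rule only has to decide which anchors are admissible.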

In place of the standard output layer, we integrate a heteroscedastic Gaussian process [2] (see Fig. 3), enabling more effective estimation of both aleatoric and epistemic uncertainty. After completing the first training stage, we use the estimated epistemic uncertainty as an informative prior to regularize the second training stage, where the model is trained on real-world observations.
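One generic way to turn first-stage uncertainty into a regularizer is a Gaussian prior centred on the first-stage parameters. The sketch below assumes a diagonal Gaussian with a per-parameter mean and variance and adds its negative log-density (up to a constant) to the data loss. This is an illustrative assumption about the form of the prior, not the exact objective from the paper; `gaussian_prior_penalty`, `regularized_loss` and `strength` are hypothetical names.

```python
from typing import Sequence


def gaussian_prior_penalty(weights: Sequence[float],
                           prior_mean: Sequence[float],
                           prior_var: Sequence[float]) -> float:
    """Negative log-density of a diagonal Gaussian prior, up to a constant."""
    return sum((w - m) ** 2 / (2.0 * v)
               for w, m, v in zip(weights, prior_mean, prior_var))


def regularized_loss(data_loss: float,
                     weights: Sequence[float],
                     prior_mean: Sequence[float],
                     prior_var: Sequence[float],
                     strength: float = 1.0) -> float:
    """Second-stage objective: data loss plus the weighted prior penalty."""
    penalty = gaussian_prior_penalty(weights, prior_mean, prior_var)
    return data_loss + strength * penalty


# Assumed first-stage result: the first weight is well determined (small
# variance), the second is not (large variance).
prior_mean = [0.5, -1.0]
prior_var = [0.01, 1.0]

# Moving each weight by the same amount (0.2) is penalised very differently.
move_certain = gaussian_prior_penalty([0.7, -1.0], prior_mean, prior_var)
move_uncertain = gaussian_prior_penalty([0.5, -0.8], prior_mean, prior_var)
print(move_certain, move_uncertain)
```

The effect is that real-world observations can freely update what the rules left undetermined, while overriding what the rules pinned down costs more.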

Experiments

In our experiments, we integrate two types of rules:

- Drivability: Vehicles should never leave the road boundaries!

- Stop-Rules: Vehicles should not cross red lights or pedestrian walkways that are in use!
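As a toy illustration of how the two rule types can be combined, the sketch below checks candidates against both a road-boundary test and a stop-line test. We assume a vehicle driving along the x-axis on a 4 m wide road, with a stop line at a fixed x-position that is only active while the light is red or the walkway is in use. The function names and the geometry are hypothetical simplifications.

```python
from typing import Dict, Sequence, Tuple

Trajectory = Sequence[Tuple[float, float]]


def on_road(trajectory: Trajectory, half_width: float = 2.0) -> bool:
    """Drivability (toy version): all waypoints within the road boundaries."""
    return all(abs(y) <= half_width for _, y in trajectory)


def respects_stop_line(trajectory: Trajectory, stop_x: float,
                       stop_active: bool) -> bool:
    """Stop rule (toy version): do not pass the stop line while it is active."""
    if not stop_active:
        return True
    return all(x <= stop_x for x, _ in trajectory)


def compliant(trajectory: Trajectory, stop_x: float, stop_active: bool) -> bool:
    """A candidate is admissible only if it satisfies both rules."""
    return on_road(trajectory) and respects_stop_line(
        trajectory, stop_x, stop_active)


candidates: Dict[str, Trajectory] = {
    "brake": [(2.0, 0.0), (4.0, 0.0), (5.0, 0.0)],
    "proceed": [(5.0, 0.0), (10.0, 0.0), (15.0, 0.0)],
    "swerve": [(2.0, 1.5), (4.0, 3.0), (5.0, 4.0)],
}

red = {name: compliant(t, stop_x=6.0, stop_active=True)
       for name, t in candidates.items()}
green = {name: compliant(t, stop_x=6.0, stop_active=False)
         for name, t in candidates.items()}
print(red)
print(green)
```

In this toy scene only braking is admissible at a red light, proceeding becomes admissible once the stop line is inactive, and swerving off the road is never admissible.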

Final Thoughts

SHIFT demonstrates that integrating prior-knowledge rules with uncertainty modeling can offer significant advantages in safety-critical domains like autonomous driving. We are excited to explore this framework further by applying it to other motion prediction architectures and by improving the reliability of automatic rule extraction using the latest advances in language models. We are also looking forward to applying our approach to other domains where data is limited but domain expertise is abundant.

References

[1] Phan-Minh T. et al. (2020). CoverNet: Multimodal behavior prediction using trajectory sets. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2020: 14062-14071

[2] Fortuin V. et al. (2022). Deep classifiers with label noise modeling and distance awareness. Transactions on Machine Learning Research (TMLR); 2022

[3] Caesar H. et al. (2020). NuScenes: A multimodal dataset for autonomous driving. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); 2020: 11621-11631

For questions, comments or other matters related to this blog post, please contact us via kleinlab@scc.kit.edu.

If you find our work useful, please cite our paper:

@misc{ManSchPasWirKle2025,
  author={Kumar Manas and Christian Schlauch and Adrian Paschke and Christian Wirth and Nadja Klein},
  title={Uncertainty-Aware Trajectory Prediction via Rule-Regularized Heteroscedastic Deep Classification},
  archivePrefix={arXiv},
  eprint={2504.13111},
  url={https://arxiv.org/abs/2504.13111},
  year={2025},
}